Enterprise Agentic Architecture: Decision Framework for Production

Intelligence DispatchesJanuary 21, 20268 min read

Enterprise Agentic Architecture: Decision Framework for Production

Most agentic AI projects stall at demo because teams optimize for the wrong things. This architectural decision framework separates shipped products from abandoned experiments.

🎯

Reading Goal

Walk away with a decision framework and maturity assessment for your agentic AI initiative.

Enterprise Agentic Architecture: Decision Framework for Production

TL;DR: The question isn't "how do I build agentic AI?" It's "should I, and at what maturity level?" This framework helps you decide if you're building a solo acoustic act or a full symphony orchestra—and how to architect for the performance you actually need.

The Studio Session: From Demo to Production

The studio monitors are humming. It's 2 AM, and your prototype just nailed the perfect run. It reasoned, it executed, it delivered. You feel that rush—the same one I get when a track finally comes together after hours of mixing.

But here's the cold reality check: A great demo is like a great solo in the shower. It's not ready for the stadium tour.

Every enterprise leader I talk to shares the same struggle:

"We built a demo in two weeks. Six months later, we're still debating how to deploy it."

The problem isn't the code. It's the composition. You're trying to score a film before you've written the main theme. This guide isn't about the syntax of agents; it's about the rhythm of production. It teaches you when to let the agents improvise, when to force them to follow the sheet music, and how to build a stage that doesn't collapse under the weight of the performance.

The Producer's Board: A Decision Framework

Before we lay down a single track of code, we need to look at the mixing board. Adjust your levels based on these four faders:

Your answers here define your lineup. Don't hire a symphony when you just need a DJ.

The Four-Tier Maturity Model (From Solo to Symphony)

Most teams over-engineer because they try to go straight to the symphony without learning to play the instrument first. Let's look at the progression.

Critical insight: Most of you should be rocking Tier 1 or 2. Tier 3 and 4 are for when you've sold out the stadium.

Reference Architecture: The Six-Plane Stage

Whether you're playing a dive bar or Wembley, you need a stage, instruments, and sound. In agentic systems, we call this the Six-Plane Model.

Architectural Decisions (The Rider)

Every tour has a rider—the non-negotiables. Here are the Architectural Decision Records (ADRs) you need for each level of fame.

Tier 1 → Tier 2: Going Autonomous

| Decision | Context | Choice | Trade-off |

|---|---|---|---|

| Orchestration | Tracking the song structure | Graph-based state machine | +Explicit rhythm / -Takes time to compose |

| State Management | Keeping everyone in tune | Typed state contracts (Pydantic) | +No bad notes / -More prep work |

| Error Handling | The show must go on | Retry limits + exponential backoff | +Resilience / -Can slow the tempo |

| Quality Gates | Catching bad takes | Multi-stage validation | +Quality / -Latency |

Tier 2 → Tier 3: The Ensemble

| Decision | Context | Choice | Trade-off |

|---|---|---|---|

| Agent Selection | Who plays the solo? | Model routing layer | +Best sound per instrument / -Complex wiring |

| Handoff Protocol | Passing the melody | Structured handoff schema | +Clarity / -Overhead |

| Failure Isolation | Broken string? | Circuit breaker per agent | +Band keeps playing / -One part missing |

| Human Checkpoints | The Producer's final listen | Async approval queue | +Safety / -Wait time |

Tier 3 → Tier 4: The Label Platform

| Decision | Context | Choice | Trade-off |

|---|---|---|---|

| Multi-Tenancy | Multiple bands, one studio | Namespace isolation | +Efficiency / -Noisy neighbors |

| Self-Service | DIY recording | Declarative workflow specs | +Speed / -Governance |

| Cost Allocation | Who pays for the studio time? | Per-workflow token tracking | +Accountability / -Instrumentation cost |

The Cost-Complexity Curve

Don't buy a stadium sound system for a coffee shop gig. This is where budgets die.

When NOT to Use Agentic AI

Sometimes, you don't need AI. You just need a spreadsheet.

| Your Situation | Agentic AI? | Better Alternative |

|---|---|---|

| Workflow has < 3 steps | No | Simple API calls |

| Predictable inputs & outputs | No | Traditional automation |

| No budget for rehearsals | No | Pre-built SaaS tools |

| Zero tolerance for wrong notes | No | Human-only workflow |

| Novel situations requiring improv | Yes | This is the jam. |

Failure Mode Analysis (The Feedback Loop)

When the mic feeds back, it ruins the show. Here's how to stop the screeching before it starts.

| Failure Mode | The Symptom | The Fix |

|---|---|---|

| Infinite Loop | The agent gets stuck on a riff | MAX_RETRIES=3 + backoff |

| State Corruption | The band is playing different songs | Pydantic validation on every bar |

| Silent Failure | The solo ended but no one clapped | Multi-stage output validation |

| Hallucination Cascade | One wrong note ruins the chord | Cross-agent fact-checking |

| Cost Explosion | The studio bill is astronomical | Token budgets + auto-throttle |

Implementation Roadmap (The Rehearsal Schedule)

Don't book the gig until you've practiced. Here's your schedule:

Code Example: State Contract (Tier 2+)

from pydantic import BaseModel, Field

from enum import Enum

from datetime import datetime

from typing import Optional

class WorkflowState(str, Enum):

"""The song structure—everyone needs to know the key and time signature."""

PENDING = "pending"

RESEARCHING = "researching"

DRAFTING = "drafting"

REVIEWING = "reviewing"

APPROVED = "approved"

FAILED = "failed"

class ResearchResult(BaseModel):

"""Each section needs its own sheet music."""

sources: list[str] = Field(..., min_items=1)

summary: str = Field(..., min_length=50, max_length=500)

confidence: float = Field(..., ge=0.0, le=1.0)

class AgentWorkflowState(BaseModel):

"""The state contract—validation on every transition."""

workflow_id: str

current_state: WorkflowState = WorkflowState.PENDING

research_result: Optional[ResearchResult] = None

draft_content: Optional[str] = None

retry_count: int = 0

created_at: datetime = Field(default_factory=datetime.utcnow)

token_budget_remaining: int = Field(default=10000)

def transition_to(self, new_state: WorkflowState) -> None:

"""Explicit state transitions prevent silent corruption."""

valid_transitions = {

WorkflowState.PENDING: [WorkflowState.RESEARCHING],

WorkflowState.RESEARCHING: [WorkflowState.DRAFTING, WorkflowState.FAILED],

WorkflowState.DRAFTING: [WorkflowState.REVIEWING, WorkflowState.FAILED],

WorkflowState.REVIEWING: [WorkflowState.APPROVED, WorkflowState.FAILED],

}

if new_state not in valid_transitions.get(self.current_state, []):

raise ValueError(f"Cannot transition from {self.current_state} to {new_state}")

self.current_state = new_state

This is your state contract—it keeps everyone in tune. Pydantic validates on every bar change, and explicit transitions prevent the "wrong song" problem.

Framework Comparison

| Framework | Best For | The Vibe | Tier Match |

|---|---|---|---|

| Claude SDK | Native apps, prototyping | Indie Rock | Tier 1-2 |

| LangGraph | Complex state machines | Prog Rock | Tier 2-3 |

| CrewAI | Role-based collaboration | Jazz Improv | Tier 2-3 |

| OCI Agent Platform | Enterprise governance | Symphony Hall | Tier 3-4 |

Frank's Take: Start with the Claude SDK or LangGraph. Don't rent the Symphony Hall until you can fill the seats.

FAQ: Studio Notes

Q: Does this actually save money? Only if the work requires improvisation. If it's routine, use a script. If it requires judgment (handling the 20% of exceptions), then yes—agents are your affordable session musicians.

Q: How do I stop hallucinations? Three layers of soundproofing: (1) Constrain the tools (don't give the drummer a trumpet). (2) Verify the output (listen to the playback). (3) Human approval for the hit singles (high stakes).

Q: Minimum team size? Tier 1: You on a laptop. Tier 2: You and a dedicated engineer. Tier 3: A small band (3-5 devs). Tier 4: A full production crew (10+).

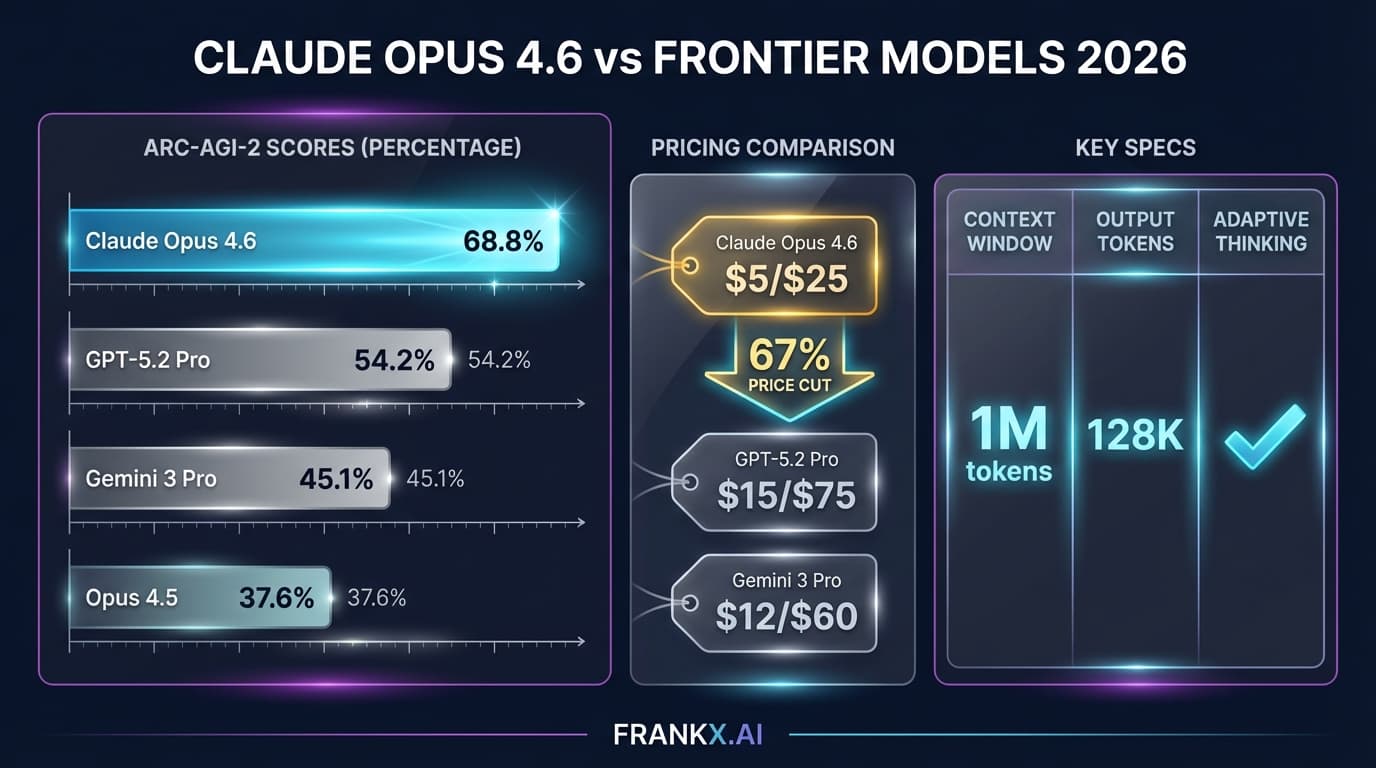

Q: Same model for everyone? No. You don't use a Stradivarius to hammer a nail. Use cheaper models for simple tasks (classification) and the heavy hitters (Claude 3.5 Sonnet, GPT-4o) for the complex solos.

Q: How do I test this? Unit tests for the notes. Integration tests for the melody. Golden set evaluations for the soul. Never test against live LLMs in your CI pipeline—that's like rehearsing during the show.

Your Next Step

- Check your tier: Are you a soloist or a conductor?

- Set the stage: Answer the decision framework.

- Start simple: You can always add instruments later. Removing them is harder.

Ready to build your ensemble? Check out the Architecture Blueprint to see the full setup, or explore the Enterprise Agent Roadmap to plan your tour.

For deep research on these patterns, dive into our AI CoE Hub.

Let's make some noise.

Related Articles

- Production LLM Agents on OCI: Architecture — Full blueprint for enterprise deployment

- Multi-Agent Orchestration Patterns — Coordination strategies that scale

- What is Agentic AI? — The fundamentals explained

Related Research

Read on FrankX.AI — AI Architecture, Music & Creator Intelligence

Weekly Intelligence

Stay in the intelligence loop

Join 1,000+ creators and architects receiving weekly field notes on AI systems, production patterns, and builder strategy.

No spam. Unsubscribe anytime.