The 7 Pillars of Production Agent Systems: What Actually Matters in 2026

AI Architecture · January 20, 2026 · 9 min read

2026 marks the shift from AI demos to production deployments. Here's the architectural framework that emerged from analyzing AWS, Azure, Google Cloud, OpenAI, Anthropic, and Oracle's approaches to production-ready AI agents.

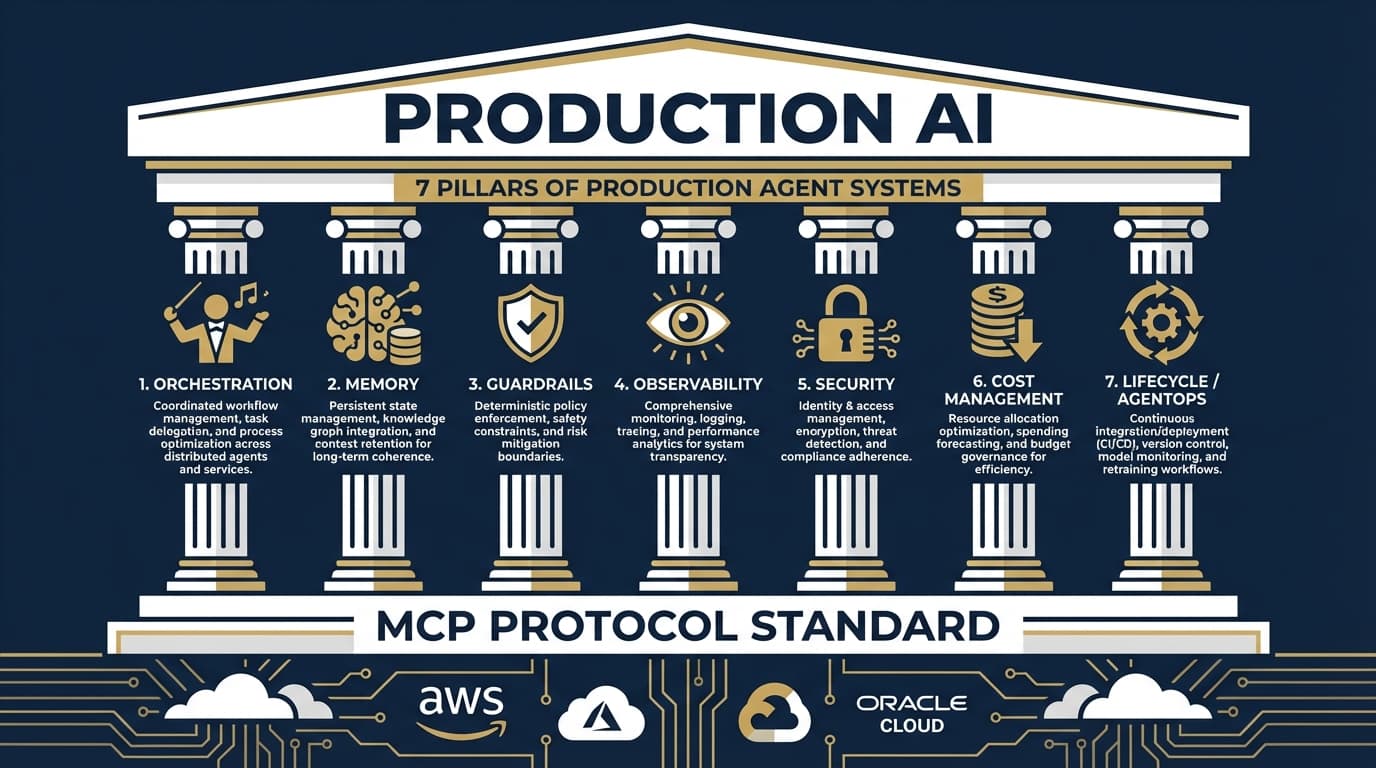

TL;DR: Production AI agents require 7 architectural pillars: orchestration, memory, guardrails, observability, security, cost management, and lifecycle management (AgentOps). Major cloud providers and API companies have converged on similar patterns while differentiating on ecosystem integration. Model Context Protocol (MCP) has emerged as the unifying standard for agent-tool integration, now under Linux Foundation governance. This framework helps you evaluate any agent platform or design your own production systems.

The Agent Leap is Here

"The era of simple prompts is over. We're witnessing the agent leap—where AI orchestrates complex, end-to-end workflows semi-autonomously." — Google Cloud AI Agent Trends 2026

Gartner predicts that 40% of enterprise applications will include task-specific AI agents by the end of 2026, up from less than 5% in 2025.

But here's what nobody tells you: demos are easy, production is hard.

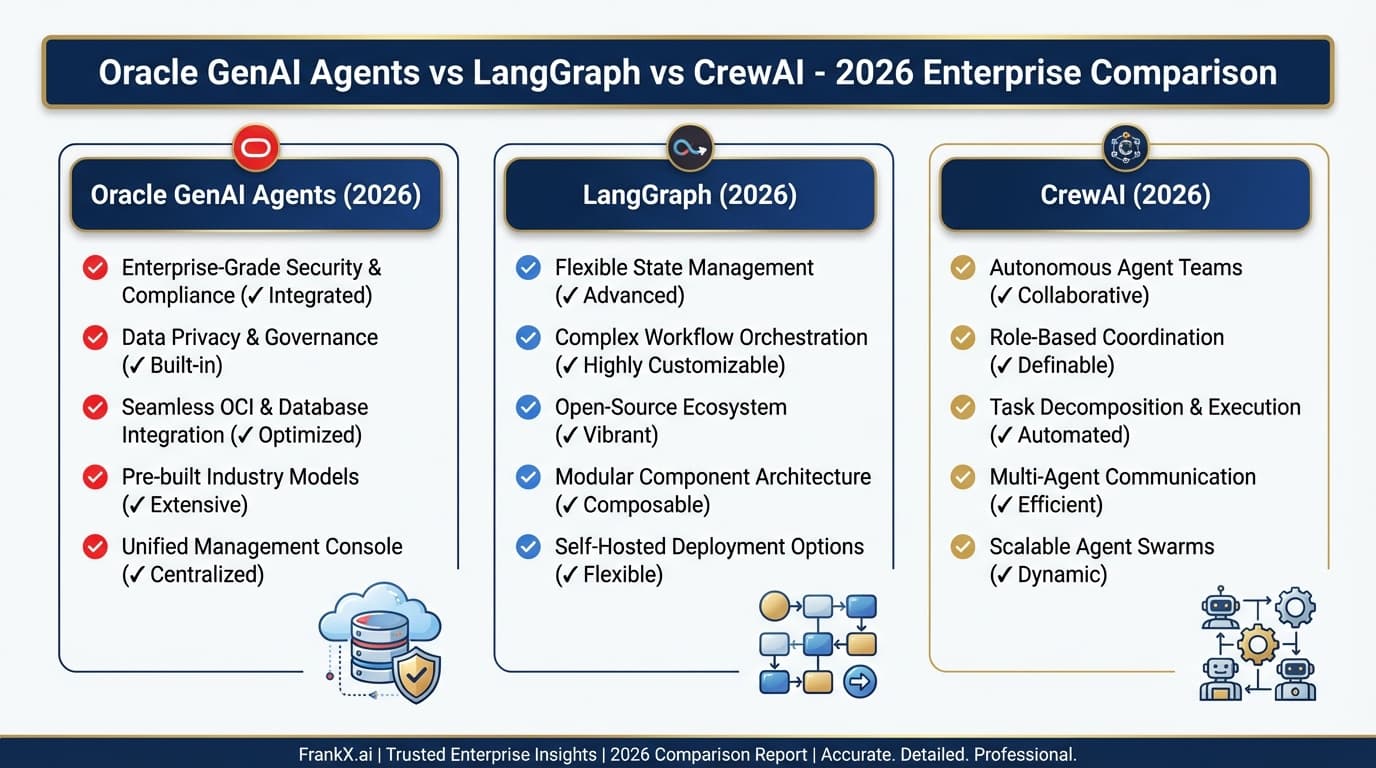

After analyzing production patterns across AWS Bedrock/AgentCore, Google Vertex AI/Agent Engine, Azure AI Foundry, OpenAI Agents SDK, Anthropic Claude Agent SDK, and Oracle ADK, I've identified 7 universal pillars that separate AI prototypes from production systems.

The 7 Pillars Framework

| Pillar | What It Solves | Why It Matters |
|---|---|---|
| 1. Orchestration | Multi-agent coordination | Agents rarely work alone |
| 2. Memory | State persistence | Stateless agents can't maintain context |
| 3. Guardrails | Safety and validation | Production demands predictable behavior |
| 4. Observability | Visibility and debugging | Can't improve what you can't measure |
| 5. Security | Access control and audit | Agents with tools are attack surfaces |
| 6. Cost Management | Token and resource budgets | Agentic workflows consume 10-100x more tokens |
| 7. Lifecycle (AgentOps) | CI/CD for agents | Agents are software; treat them that way |

Let's break down each pillar.

Pillar 1: Orchestration

The Problem: Single agents hit capability ceilings. Complex tasks require specialized skills, different tools, and coordinated workflows.

The Solution: Multi-agent orchestration patterns.

Common Patterns

Supervisor-Specialist

Swarm (Parallel)

Sequential Pipeline

Input → Agent 1 → Agent 2 → Agent 3 → Output
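To make the three patterns concrete, here is a minimal Python sketch. It models each agent as a plain function from text to text; the names `sequential_pipeline`, `swarm`, and `supervisor` are illustrative and not taken from any provider SDK. In a real system each agent would wrap a model call, and the supervisor's `route` function would usually be an LLM deciding which specialist to call.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical: an "agent" is any function that takes text and returns text.
# In production each one would wrap a model call with its own prompt and tools.
Agent = Callable[[str], str]


def sequential_pipeline(agents: list[Agent], task: str) -> str:
    """Input -> Agent 1 -> Agent 2 -> ... -> Output: each agent refines the last result."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def swarm(agents: list[Agent], task: str, merge: Callable[[list[str]], str]) -> str:
    """Run every agent on the same task in parallel, then merge their answers."""
    if not agents:
        raise ValueError("swarm needs at least one agent")
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        answers = list(pool.map(lambda agent: agent(task), agents))  # keeps input order
    return merge(answers)


def supervisor(route: Callable[[str], str], specialists: dict[str, Agent], task: str) -> str:
    """A supervisor picks one specialist by name and delegates the whole task to it."""
    choice = route(task)
    if choice not in specialists:
        raise ValueError(f"supervisor picked unknown specialist {choice!r}")
    return specialists[choice](task)


if __name__ == "__main__":
    shout = lambda text: text.upper()
    excite = lambda text: text + "!"
    print(sequential_pipeline([shout, excite], "ship it"))  # SHIP IT!
```

The swarm uses a thread pool because agent calls are typically I/O-bound network requests; the merge step (voting, summarizing, picking the best answer) is where most of the design effort goes.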

Provider Implementations

| Provider | Orchestration Approach |
|---|---|
| AWS | Strands framework (Swarms, Agent Graphs, Workflows) |
| Google | Agent2Agent protocol (50+ partners) |
| Azure | Foundry Agent Service orchestration |
| OpenAI | Handoffs in Agents SDK |
| Anthropic | Hooks system for control flow |
| Oracle | ADK multi-agent patterns + Select AI Agents in DB |

Pillar 2: Memory

The Problem: LLMs are stateless. Without memory, agents can't learn, can't remember context across sessions, and can't maintain coherent multi-turn interactions.

The Solution: Multi-tier memory architecture.

| Memory Type | Scope | Use Case |
|---|---|---|
| Short-term | Single session | Conversation context |
| Long-term | Across sessions | User preferences, learned patterns |
| Episodic | Historical | "Remember when we..." |
| Semantic | Factual | Business rules, domain knowledge |
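A toy in-process version of the four tiers helps show how they differ in scope. This is a sketch that assumes everything fits in memory; the class and method names are hypothetical, and a production system would back each tier with a managed store (for example, a session service for short-term turns and a vector index for semantic knowledge).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical in-process store with one container per memory tier."""
    max_turns: int = 20
    # Short-term: a bounded window of turns from the current session only.
    short_term: deque = field(init=False, repr=False)
    # Long-term: per-user preferences that persist across sessions.
    long_term: dict = field(default_factory=dict)
    # Episodic: per-user summaries of past sessions ("Remember when we...").
    episodic: dict = field(default_factory=dict)
    # Semantic: shared facts such as business rules and domain knowledge.
    semantic: dict = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.short_term = deque(maxlen=self.max_turns)

    def add_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))  # oldest turns drop off once full

    def end_session(self, user_id: str, summary: str) -> None:
        """Keep a summary of the session as an episode, then reset the window."""
        self.episodic.setdefault(user_id, []).append(summary)
        self.short_term.clear()

    def build_context(self, user_id: str, max_episodes: int = 3) -> str:
        """Assemble the context an agent would see for this user, broadest tier first."""
        lines = [f"[rule] {k}: {v}" for k, v in self.semantic.items()]
        lines += [f"[pref] {k}: {v}" for k, v in self.long_term.get(user_id, {}).items()]
        lines += [f"[episode] {e}" for e in self.episodic.get(user_id, [])[-max_episodes:]]
        lines += [f"[{role}] {text}" for role, text in self.short_term]
        return "\n".join(lines)
```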

Provider Implementations

- AWS: AgentCore Memory (managed, short/long-term, semantic extraction)

- Google: Agent Engine Sessions (GA), Memory Bank

- Azure: Foundry session management

- OpenAI: Context management in SDK

- Anthropic: Project context (CLAUDE.md), memory features

- Oracle: Native in Database 26ai (AI Vector Search + structured data)

Key insight: You need ALL memory types. Managed memory services are now table stakes.

Pillar 3: Guardrails

The Problem: LLMs hallucinate, can be jailbroken, and might expose sensitive data. Production systems need predictable, safe behavior.

The Solution: Input/output guardrails at every layer.

Golden Rule (from AWS): Business logic should reside OUTSIDE the model.

LLM plans → JSON action plan → Action Router validates → Execute
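The flow above can be sketched as a validator that sits between the model and your systems. The action names, parameters, and refund limit below are hypothetical; the point is that the model only proposes a JSON plan, while allowlists and business rules live in ordinary code that the model cannot talk its way around.

```python
import json
from typing import Any, Callable

# Hypothetical allowlist: each permitted action and the exact parameters it takes.
ALLOWED_ACTIONS = {
    "lookup_order": {"order_id"},
    "issue_refund": {"order_id", "amount"},
}
MAX_REFUND = 100.0  # a business rule enforced in code, not in the prompt


class PlanRejected(ValueError):
    """Raised when the model's plan fails validation; nothing gets executed."""


def validate_plan(raw: str) -> list[dict]:
    """Parse the LLM's JSON action plan and check every step before execution."""
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise PlanRejected(f"plan is not valid JSON: {exc}") from exc
    if not isinstance(plan, list):
        raise PlanRejected("plan must be a JSON list of steps")
    for i, step in enumerate(plan):
        if not isinstance(step, dict):
            raise PlanRejected(f"step {i} is not an object")
        action, params = step.get("action"), step.get("params")
        if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
            raise PlanRejected(f"step {i}: action {action!r} is not allowed")
        if not isinstance(params, dict) or set(params) != ALLOWED_ACTIONS[action]:
            raise PlanRejected(f"step {i}: wrong parameters for {action}")
        if action == "issue_refund":
            amount = params["amount"]
            if isinstance(amount, bool) or not isinstance(amount, (int, float)):
                raise PlanRejected(f"step {i}: refund amount must be a number")
            if not 0 < amount <= MAX_REFUND:
                raise PlanRejected(f"step {i}: refund of {amount} violates policy")
    return plan


def execute(plan: list[dict], handlers: dict[str, Callable[..., Any]]) -> list[Any]:
    """Action router: dispatch validated steps to deterministic handler functions."""
    return [handlers[step["action"]](**step["params"]) for step in plan]
```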

Pillar 4: Observability

The Problem: Multi-step agent workflows are black boxes. When something fails, you have no idea why.

The Solution: Comprehensive tracing, logging, and evaluation.

What to Track (Azure's Framework)

- Intent Resolution: Did the agent understand the request?

- Task Adherence: Did the agent follow instructions?

- Tool Call Accuracy: Right tools, right parameters?

- Response Completeness: Was the answer sufficient?
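Two of these ideas can be illustrated with the standard library alone: recording one span per step so a failure can be located, and scoring tool calls against an expected sequence. This is a hypothetical sketch, not Azure's evaluator API; `tool_call_accuracy` is a deliberately simple exact-match stand-in, and a real deployment would export spans to a tracing backend rather than keep them in a list.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Span:
    trace_id: str
    name: str
    attributes: dict
    start: float
    end: Optional[float] = None
    error: Optional[str] = None


@dataclass
class Tracer:
    """Hypothetical in-memory tracer; a real one would export spans to a backend."""
    spans: list = field(default_factory=list)

    @contextmanager
    def span(self, trace_id: str, name: str, **attributes) -> Iterator[Span]:
        s = Span(trace_id, name, attributes, start=time.perf_counter())
        self.spans.append(s)
        try:
            yield s
        except Exception as exc:
            s.error = repr(exc)  # record the failure on this step's span, then re-raise
            raise
        finally:
            s.end = time.perf_counter()

    def first_failure(self, trace_id: str) -> Optional[Span]:
        """Answer 'which step broke?' for one workflow run."""
        return next((s for s in self.spans if s.trace_id == trace_id and s.error), None)


def tool_call_accuracy(expected: list, actual: list) -> float:
    """Share of expected (tool, params) calls matched exactly at the same position."""
    if not expected:
        return 1.0
    hits = sum(1 for want, got in zip(expected, actual) if want == got)
    return hits / len(expected)
```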

Provider Implementations

| Provider | Observability Approach |
|---|---|
| AWS | CloudWatch + X-Ray integration |
| Google | Cloud Trace + Agent Engine metrics |
| Azure | Application Insights + Foundry evaluators |
| OpenAI | Built-in tracing dashboard |
| Anthropic | Hooks (PreToolUse, PostToolUse) |
| Oracle | OCI Monitoring + Database audit |

Pillar 5: Security

The Problem: Agents with tool access are attack surfaces. They can read data, call APIs, and execute actions. Who controls what they can do?

The Solution: Agent Identity and fine-grained permissions.

New Concept: Agent IAM

In 2026, agents get their own identities:

- Agents have IAM identities (GCP and Azure leading)

- Tool access controlled via IAM policies

- Audit trails tied to agent identity

- OAuth-based tool authentication

This solves: "Which agent called which tool with whose permissions?"
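A minimal sketch of the idea, assuming a simple allow-list model rather than any specific cloud IAM API: each call carries both the agent's identity and the user it acts for, access requires that both are granted the tool, and every decision is written to an audit log. `AgentIdentity` and `PolicyEngine` are hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str      # the agent's own identity
    on_behalf_of: str  # the user whose permissions the agent is borrowing


@dataclass
class PolicyEngine:
    """Hypothetical allow-list policy; not a real cloud IAM API."""
    agent_grants: dict = field(default_factory=dict)  # agent_id -> set of tool names
    user_grants: dict = field(default_factory=dict)   # user_id -> set of tool names
    audit_log: list = field(default_factory=list)

    def authorize(self, who: AgentIdentity, tool: str) -> bool:
        # Least privilege: the tool must be granted to the agent AND to the user.
        allowed = (tool in self.agent_grants.get(who.agent_id, set())
                   and tool in self.user_grants.get(who.on_behalf_of, set()))
        # Log every decision, allowed or denied, tied to both identities.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": who.agent_id,
            "on_behalf_of": who.on_behalf_of,
            "tool": tool,
            "allowed": allowed,
        })
        return allowed
```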

MCP Security Model

The Model Context Protocol ecosystem now offers enterprise security capabilities:

- Approval workflows (Cloudflare)

- Identity-layer integration (Auth0)

- Observability (New Relic)

- Governance (under the Linux Foundation's Agentic AI Foundation, AAIF)
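Approval workflows boil down to holding certain tool calls until a human decides. The sketch below is vendor-neutral and hypothetical (it does not model Cloudflare's or any other product's API): low-risk tools run immediately, while tools listed in `needs_approval` return a pending ticket that must be approved or denied.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any


@dataclass
class PendingCall:
    call_id: int
    tool: str
    args: dict
    status: str = "pending"  # becomes "approved" or "denied"


@dataclass
class ApprovalGate:
    """Hypothetical human-in-the-loop gate in front of an agent's tools."""
    tools: dict           # tool name -> callable
    needs_approval: set   # tool names that require a human decision
    pending: dict = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count, init=False, repr=False)

    def request(self, tool: str, args: dict) -> Any:
        if tool not in self.needs_approval:
            return self.tools[tool](**args)  # low risk: run immediately
        call = PendingCall(next(self._ids), tool, args)
        self.pending[call.call_id] = call
        return call  # high risk: the agent must wait for decide()

    def decide(self, call_id: int, approve: bool) -> Any:
        call = self.pending.pop(call_id)
        call.status = "approved" if approve else "denied"
        return self.tools[call.tool](**call.args) if approve else None
```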

Pillar 6: Cost Management

The Problem: Agentic workflows consume 10-100x more tokens than single-shot calls. Reasoning loops, tool calls, and multi-agent coordination add up fast.

The Solution: Multi-strategy cost optimization.

| Strategy | Savings | When to Use |
|---|---|---|
| Prompt caching | 50-90% | Stable system prompts, RAG context |
| Token budgets | Variable | Per-session limits |
| Model tiering | 10-50x | Route simple queries to smaller models |
| Batch processing | 50%+ | Non-real-time workflows |
| Background mode | Operational | Long-running tasks |
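The arithmetic behind caching, tiering, and budgets is simple enough to sketch. All prices below are placeholders, not any provider's real rates, and the model names `small`/`large`, the routing heuristic, and the 90% cache discount are assumptions for illustration; the cost model also ignores any cache-write surcharge a provider may apply.

```python
from dataclasses import dataclass

# Hypothetical per-million-token prices; substitute your provider's real rates.
PRICES_PER_MTOK = {
    "small": {"input": 0.25, "output": 1.25},
    "large": {"input": 3.00, "output": 15.00},
}


def pick_model(prompt: str, needs_tools: bool) -> str:
    """Crude tiering heuristic (hypothetical): short, tool-free prompts go to the small model."""
    return "small" if len(prompt) < 500 and not needs_tools else "large"


def cost_usd(model: str, input_tokens: int, output_tokens: int,
             cached_input_tokens: int = 0, cache_discount: float = 0.9) -> float:
    """Estimated cost of one call; cached input tokens are billed at a discount."""
    p = PRICES_PER_MTOK[model]
    uncached = input_tokens - cached_input_tokens
    billable_input = uncached + cached_input_tokens * (1 - cache_discount)
    return (billable_input * p["input"] + output_tokens * p["output"]) / 1_000_000


class BudgetExceeded(RuntimeError):
    pass


@dataclass
class SessionBudget:
    """Per-session spending cap, charged with an estimated cost before each model call."""
    limit_usd: float
    spent_usd: float = 0.0

    def charge(self, amount: float) -> None:
        if self.spent_usd + amount > self.limit_usd:
            raise BudgetExceeded(f"call would exceed the ${self.limit_usd:.2f} session budget")
        self.spent_usd += amount
```

With these placeholder prices, a `large` call with 100,000 input tokens and 2,000 output tokens costs about $0.33; if 80,000 of those input tokens are served from cache, it drops to about $0.114. Charging the estimate before each call means a runaway reasoning loop fails fast instead of silently accumulating spend.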

Provider Features

- OpenAI: Automatic prompt caching, background mode

- Anthropic: Prompt caching (opt-in via cache breakpoints)

- AWS: Provisioned throughput, on-demand

- Google: Agent Engine pricing (updated Jan 2026)

- Azure: Reserved capacity

- Oracle: Reserved capacity, predictable pricing (no surprise egress)

Pillar 7: Lifecycle Management (AgentOps)

The Problem: Agents are software. They need versioning, testing, deployment, monitoring, and rollback—just like any production system.

The Solution: AgentOps practices.

Azure's AI Red Teaming Agent

Unique and valuable: it simulates adversarial attacks against your agents BEFORE deployment.

CI/CD Integration

Azure AI Foundry leads here:

- GitHub Actions / Azure DevOps extensions

- Auto-evaluate agents on every commit

- Compare versions with built-in metrics

- Confidence intervals and significance tests
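The "compare versions" and "significance tests" steps can be sketched as a CI gate. This is a hypothetical illustration, not Foundry's implementation: it takes per-test pass/fail results for a baseline and a candidate agent, reports a Wilson confidence interval for a pass rate, and blocks the merge when a one-sided two-proportion z-test says the candidate is significantly worse.

```python
import math


def pass_rate_ci(passes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate (about 95% with the default z)."""
    p = passes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def two_proportion_z(base_passes: int, n1: int, cand_passes: int, n2: int) -> float:
    """z statistic for candidate minus baseline pass rate, using a pooled variance."""
    p1, p2 = base_passes / n1, cand_passes / n2
    pooled = (base_passes + cand_passes) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return 0.0 if se == 0 else (p2 - p1) / se


def gate(baseline: list[bool], candidate: list[bool], z_crit: float = 1.645) -> bool:
    """Return False (block the merge) if the candidate is significantly worse at ~5%."""
    z = two_proportion_z(sum(baseline), len(baseline), sum(candidate), len(candidate))
    return z > -z_crit
```

The z-test treats the two runs as independent samples. If both versions run on the same evaluation set, a paired test such as McNemar's is the more appropriate choice; small evaluation sets also produce wide intervals, so only large regressions will reach significance.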

MCP: The Unifying Standard

Model Context Protocol has become the de facto standard for agent-tool integration.

Timeline:

- November 2024: Anthropic introduces MCP

- 2025: OpenAI and Google DeepMind adopt

- December 2025: Linux Foundation's AAIF takes governance

- 2026: Enterprise-ready with security, governance, approval workflows

Why it matters: Build MCP servers once, then use them with any MCP-compatible agent framework or client.
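Under the hood, MCP messages are JSON-RPC 2.0, and tools are exposed through the `tools/list` and `tools/call` methods. The toy dispatcher below shows that message shape using only the standard library. It is a sketch that omits the initialization handshake, capability negotiation, notifications, and transport (stdio or HTTP), so a real server should be built on one of the official MCP SDKs. The `add` tool is hypothetical.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: name -> (description, JSON Schema for inputs, function)
TOOLS: dict[str, tuple[str, dict, Callable[..., Any]]] = {
    "add": (
        "Add two integers",
        {"type": "object",
         "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
         "required": ["a", "b"]},
        lambda a, b: a + b,
    ),
}


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP tools/list and tools/call methods."""
    req = json.loads(message)
    method, req_id = req.get("method"), req.get("id")
    if method == "tools/list":
        result = {"tools": [{"name": n, "description": d, "inputSchema": s}
                            for n, (d, s, _) in TOOLS.items()]}
    elif method == "tools/call":
        name = req["params"]["name"]
        args = req["params"].get("arguments", {})
        if name not in TOOLS:
            return _error(req_id, -32602, f"Unknown tool: {name}")
        try:
            value = TOOLS[name][2](**args)
            result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
        except Exception as exc:  # tool failures are reported in the result, not as protocol errors
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```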

Provider Comparison Matrix

| Pillar | AWS | Google | Azure | OpenAI | Anthropic | Oracle |
|---|---|---|---|---|---|---|
| Orchestration | ★★★★★ | ★★★★☆ | ★★★★☆ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |
| Memory | ★★★★★ | ★★★★☆ | ★★★☆☆ | ★★★☆☆ | ★★★☆☆ | ★★★★★ |
| Guardrails | ★★★★★ | ★★★★☆ | ★★★★☆ | ★★★★☆ | ★★★★☆ | ★★★★☆ |
| Observability | ★★★★☆ | ★★★★☆ | ★★★★★ | ★★★★☆ | ★★★☆☆ | ★★★★☆ |
| Security | ★★★★★ | ★★★★★ | ★★★★★ | ★★★☆☆ | ★★★☆☆ | ★★★★★ |
| Cost Mgmt | ★★★★☆ | ★★★★☆ | ★★★★☆ | ★★★★☆ | ★★★★☆ | ★★★★★ |
| AgentOps | ★★★★☆ | ★★★★☆ | ★★★★★ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |

What's Next

This is Part 1 of an 8-part series on Production Agent Patterns:

- The 7 Pillars of Production Agent Systems (this post)

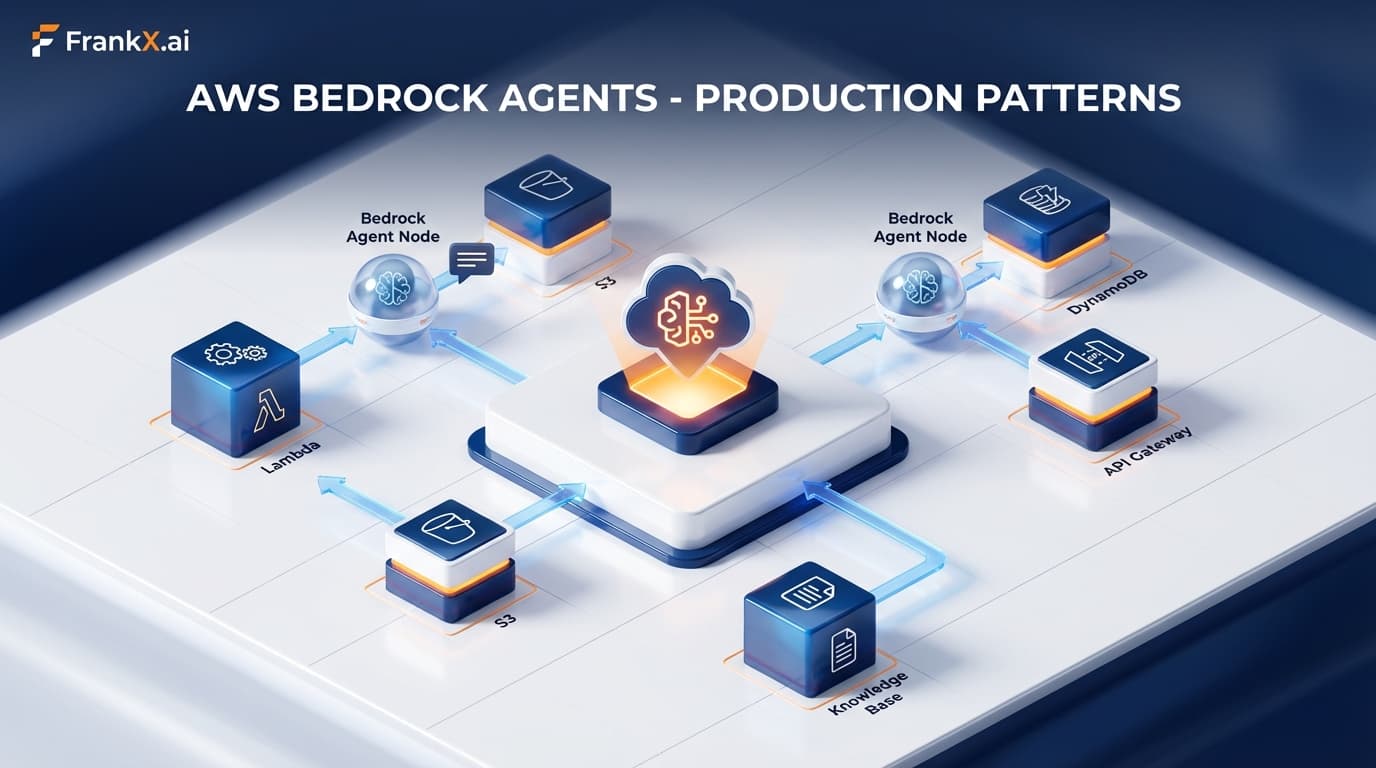

- AWS Bedrock AgentCore Deep Dive

- Google Vertex AI Agent Engine Deep Dive

- Azure AI Foundry Deep Dive

- OpenAI Agents SDK Deep Dive

- Claude Agent SDK Deep Dive

- MCP - The Unifying Standard

- Choosing Your Stack (Decision Guide)

Subscribe to get the next installments.

FAQ

What makes an AI agent "production-ready"?

Production-ready agents address all 7 pillars: they have proper orchestration for complex tasks, persistent memory for context, guardrails for safety, observability for debugging, security for access control, cost management for sustainability, and proper lifecycle management for deployment and updates.

How are AI agents different from simple LLM calls?

Simple LLM calls are stateless, single-turn interactions. Agents maintain state, use tools, make decisions across multiple steps, and coordinate with other agents. This complexity requires the architectural patterns described in this framework.

What's the biggest challenge in agent deployment?

Observability. Multi-step agent workflows are inherently difficult to debug. When an agent fails at step 17 of a 25-step workflow, understanding what went wrong requires comprehensive tracing that most teams don't implement from the start.

Do I need cloud infrastructure for production agents?

For enterprise production workloads, yes. Managed services from AWS, Google, Azure, or Oracle provide the security, scalability, and observability infrastructure that's impractical to build yourself. For smaller workloads, API-based solutions from OpenAI or Anthropic work well.

What is AgentOps?

AgentOps is the extension of DevOps practices to AI agents. It includes development environments, testing (including adversarial red-teaming), staged deployment, continuous monitoring, automated evaluation, and the ability to rollback when agents misbehave.

Sources

- AWS Multi-Agent Orchestration Guidance

- Google AI Agent Trends 2026

- Azure Agent Observability Best Practices

- OpenAI Agents SDK

- Anthropic Claude Agent SDK

- Model Context Protocol

- Oracle Database 26ai

Related Articles

- Production LLM Agents on OCI — Enterprise deployment architecture

- Production Agent Patterns: AWS Bedrock — AWS implementation guide

- Multi-Agent Orchestration Patterns — Coordination at scale

Related Research

Read on FrankX.AI — AI Architecture, Music & Creator Intelligence
